How Boston Dynamics upgraded the Atlas robot — and what’s next

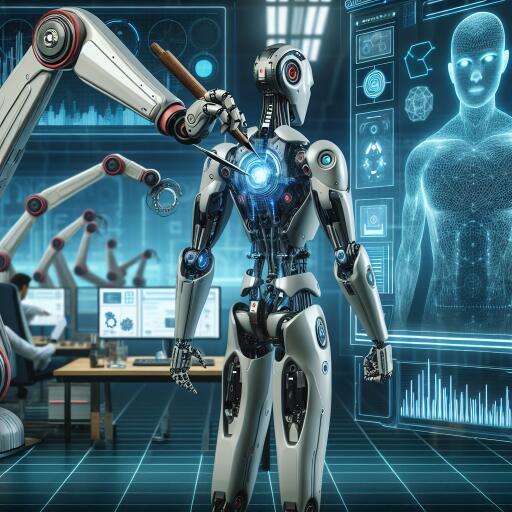

Just a few years ago, Boston Dynamics’ humanoid Atlas drew attention for its parkour demos but still moved like a machine. The latest generation turns that perception on its head: Atlas now cartwheels, dances, and runs with a striking, human-like flow. It can twist its arms, head, and torso through complete rotations, pivot its upper body to change direction without turning its legs, and even spring up from the floor using only its feet, a move no human could pull off.

From stiff steps to full-body flow

The most visible leap is range of motion. Instead of turning around to reverse course, Atlas simply rotates its upper torso 180 degrees and keeps going. Joints in the limbs, torso, and head can rotate continuously, enabling athletic transitions that look more like choreography than robotics. That fluidity isn’t just for show: it lets the robot work in tight spaces, reach awkward angles, and recover quickly from slips.
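
To make the difference concrete, here is a minimal Python sketch of how a controller might plan a turn for a joint with hard stops versus one that can spin freely. The ±170-degree limits and the function names are hypothetical illustrations, not Atlas specifications. The only point is that a continuous joint can always take the shortest way around.

```python
def wrap_degrees(angle: float) -> float:
    """Map any angle to the half-open interval [-180, 180)."""
    return (angle + 180.0) % 360.0 - 180.0


def continuous_move(current: float, target: float) -> float:
    """Signed rotation for a joint with no hard stops: always take the shortest way around."""
    return wrap_degrees(target - current)


def limited_move(current: float, target: float,
                 lower: float = -170.0, upper: float = 170.0) -> float:
    """Signed rotation for a joint with hard stops (hypothetical +/-170 degree limits).

    The joint cannot pass through its stops, so it must travel directly from
    current to target, even when the other way around would be much shorter.
    """
    if not lower <= target <= upper:
        raise ValueError(f"target {target} is outside the joint range [{lower}, {upper}]")
    return target - current


# Torso currently at 150 degrees; the new heading is -150 degrees (60 degrees further on).
print(continuous_move(150.0, -150.0))  # 60.0
print(limited_move(150.0, -150.0))     # -300.0
```

In this example the free joint turns 60 degrees forward. The limited joint has to swing 300 degrees back the other way to avoid its stops, and with these hypothetical limits it could not reach a full 180-degree heading at all.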

Engineering out the weak points

One subtle but crucial redesign makes those acrobatics practical: eliminating the cables and wires that run across rotating joints. In many robots, wiring that flexes with every joint movement is a common failure point, because repeated motion gradually wears it down. Atlas’ internals are rerouted so that no conductors cross those spinning axes. As a result, the robot is more durable and easier to service, and its joints are free to turn a full 360 degrees. In short: fewer fragile parts, more flexibility.

A smarter brain, trained the hands-on way

Under the hood, Atlas’ control stack now runs on Nvidia hardware, which lets the robot handle more demanding AI workloads on board. But how the robot learns matters as much as the chips inside. A human operator wearing virtual reality gear can inhabit the robot’s perspective and demonstrate a task repeatedly until Atlas can reproduce it on its own. Think of it as performance capture for robots: show, refine, repeat.

In practice, this teleoperation loop has been used to teach Atlas everyday manipulation tasks such as stacking cups or tying a simple knot. Each repetition feeds data to the robot’s learning system, helping it work out how to sequence motions, keep its body stable while reaching, and correct mistakes. Over time, the goal is for Atlas to generalize from these examples and handle slight variations on its own, without an operator guiding every step.
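
The article doesn’t detail Atlas’ learning pipeline, so the following is only a toy stand-in. It is a nearest-neighbor behavior-cloning policy in Python, one of the simplest ways to turn recorded demonstrations into behavior. Each teleoperated repetition is stored as an (observation, action) pair. For a new situation, the policy blends the actions from the closest recorded demonstrations, which is what lets it cope with slight variations. The class name, the one-dimensional cup-position observation, and the inverse-distance weighting are all illustrative assumptions; Atlas’ real system relies on a far more sophisticated learned model.

```python
import math
from typing import List, Tuple

Observation = Tuple[float, ...]
Action = Tuple[float, ...]


class NearestNeighborPolicy:
    """Toy behavior cloning: imitate the k closest recorded demonstrations (hypothetical)."""

    def __init__(self, k: int = 2) -> None:
        self.k = k
        self.demos: List[Tuple[Observation, Action]] = []

    def record(self, observation: Observation, action: Action) -> None:
        """Store one teleoperated step: what the robot sensed and what the operator did."""
        self.demos.append((observation, action))

    def act(self, observation: Observation) -> Action:
        """Blend the actions of the k nearest demonstrations, weighting closer ones more."""
        if not self.demos:
            raise RuntimeError("no demonstrations recorded yet")
        nearest = sorted(self.demos, key=lambda d: math.dist(d[0], observation))[: self.k]
        # Inverse-distance weights; the small epsilon avoids dividing by zero on an exact match.
        weights = [1.0 / (math.dist(obs, observation) + 1e-6) for obs, _ in nearest]
        total = sum(weights)
        return tuple(
            sum(w * demo_action[i] for w, (_, demo_action) in zip(weights, nearest)) / total
            for i in range(len(nearest[0][1]))
        )


# Hypothetical demos: cup position along a table (metres) -> where the operator placed the hand.
policy = NearestNeighborPolicy(k=2)
policy.record((0.0,), (0.0,))
policy.record((1.0,), (1.0,))
print(policy.act((0.5,)))  # (0.5,)
```

A cup halfway between the two demonstrated positions yields a hand target halfway between the demonstrated actions, a crude form of generalizing to slight variations. Practical systems replace this lookup with a trained model, because raw nearest-neighbor matching scales poorly to high-dimensional camera and joint data.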

Hands that adapt on the fly

Manipulation is where humanoids often stumble, and Atlas tackles it with a fresh approach. Each hand has three digits that can swing into different configurations. One mode works like a two-finger pinch for small, precise objects; another reorients a digit to act as a thumb for more human-like grasps; and a wide stance helps scoop up or carry larger items. Tactile sensors in the fingertips feed pressure and contact data to the robot’s neural network. The network uses those readings to decide how firmly to grip and when to adjust, so the hand neither lets objects slip nor crushes delicate ones.
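
The Python sketch below illustrates the two ideas in that paragraph. It picks a digit configuration from an object’s size, then nudges grip force in response to tactile slip signals. The three mode names mirror the paragraph. Everything else is a hypothetical stand-in: the size thresholds, the force values in newtons, and the simple “tighten on slip, otherwise ease off” rule. The article describes tactile data feeding a neural network, not hand-written rules like these.

```python
from enum import Enum


class GraspMode(Enum):
    PINCH = "two-finger pinch"  # small, precise objects
    THUMB = "opposed thumb"     # human-like wrap grasps
    WIDE = "wide stance"        # scooping or carrying larger items


def choose_mode(object_width_cm: float) -> GraspMode:
    """Pick a digit configuration from object width (hypothetical thresholds)."""
    if object_width_cm < 3.0:
        return GraspMode.PINCH
    if object_width_cm < 12.0:
        return GraspMode.THUMB
    return GraspMode.WIDE


def adjust_grip(force_n: float, slip_detected: bool, max_force_n: float,
                step_n: float = 0.5, relax_n: float = 0.1, min_force_n: float = 0.5) -> float:
    """One tactile feedback step: squeeze harder when the fingertips report slip,
    otherwise relax slightly toward the lightest grip that still holds, and always
    stay within [min_force_n, max_force_n] so delicate objects are not crushed."""
    force_n = force_n + step_n if slip_detected else force_n - relax_n
    return max(min_force_n, min(force_n, max_force_n))


# Hypothetical run: a 5 cm delicate object with a 2 N force limit and a brief slip episode.
mode = choose_mode(5.0)
force = 1.0
for slipping in [True, True, True, False, False]:
    force = adjust_grip(force, slipping, max_force_n=2.0)
print(mode.name, round(force, 2))
```

During the slip episode the grip climbs until it hits the 2 N cap instead of overshooting. Once the slipping stops, it gradually eases off toward the lightest hold that works.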

Teleoperation still has room to grow

Even with better hands and clever training, the hard part isn’t only moving to the right place; it’s applying the right force at the right moment. Fine-grained control of grip strength, motion timing, and contact dynamics remains an open challenge. Expect continued work on haptics, control policies, and feedback systems so that operators can teach tasks more naturally and robots can carry them out more reliably without supervision.

The hype curve vs. reality

Humanoid robots are basking in a wave of attention, with forecasts of millions (or more) working alongside us. Actual progress is more methodical. Software can iterate quickly, but turning breakthroughs into dependable machines takes time. To reach everyday deployment, robots must be robust, safe, and cost-effective, not just spectacular in demos. That means more testing, more endurance work, and manufacturing at a scale large enough to bring costs down.

Why it matters for VR and interactive tech

For gaming and VR enthusiasts, Atlas hints at a powerful crossover: virtual tools that train physical skills. Teleoperation via VR doesn’t just make for cool videos; it is a practical pipeline for encoding human intuitions about balance, timing, and dexterity into a robot’s behavior. Imagine building training scenarios with game-like interfaces, then watching those skills play out in the real world. As simulation and mixed reality improve, expect tighter loops between “playing” a task and deploying it on hardware.

In the short term, Atlas will likely spend more time in labs and controlled trials, refining its manipulation and reliability. In the long term, the blend of high-mobility bodies, versatile hands, and VR-driven teaching could push humanoids into roles that benefit from human-like reach and movement: logistics, industrial support, and eventually service tasks. The headline-grabbing flips are fun, but the real story is the engineering plumbing that makes a robot both agile and dependable.

Atlas’ new chapter isn’t just about stronger legs or smarter code; it’s a blueprint for how robots will be built and taught in the years ahead. Superhuman range of motion, fewer mechanical failure points, AI that learns from human demonstrations, and hands that reconfigure on demand: these are the ingredients for turning humanoids from viral sensations into everyday tools. The next step is proving they can do it day after day, safely and affordably.