Examining speakers’ subjective and bio-behavioral responses to audience-induced social-evaluative threat via immersive VR – Scientific Reports

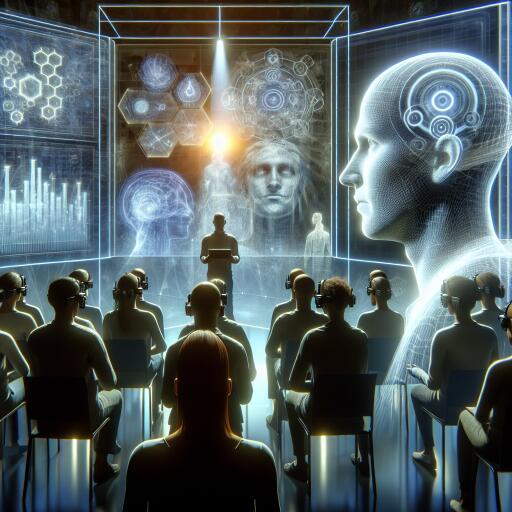

Public speaking is equal parts performance and psychology, and few stages feel as high-stakes as facing a skeptical crowd. A new immersive virtual reality (VR) study puts that pressure under the microscope, showing how the mood of an audience can measurably shape the way speakers feel, sound, and behave—all without ever leaving a headset.

What the researchers set out to test

The study used a VR-powered presentation scenario to compare two conditions: delivering a scientific talk to a crowd that appears engaged and supportive versus one that is visibly detached or critical. Because the entire setting was virtual, the team could control audience behavior precisely while capturing a rich set of signals from the speaker.

Data collection spanned multiple layers of performance and physiology:

- Behavioral markers such as eye gaze, vocal characteristics, and the openness and expressiveness of body movement

- Physiological activity including heart rate, breathing rate, pupil dilation, and electroencephalography (EEG)

- Self-reports of emotion, arousal, anxiety, and perceived cognitive/social effort

The impact of an unsupportive audience

Facing a cold room didn’t just feel worse—it changed how people spoke. Compared to the supportive condition, the unsupportive virtual audience was associated with:

- Higher negative affect and elevated arousal and anxiety

- Greater perceived mental and social effort to continue the talk

- A measurable slowdown in speaking rate

Acoustic analysis added nuance: voices carried signs of heightened emotional activation and greater vocal dominance in the unsupportive condition. In other words, while speakers slowed down, their delivery took on a more forceful edge—potentially an unconscious attempt to regain control or authority when they sensed resistance.
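To make the speaking-rate finding concrete, here is a minimal Python sketch of one common way to quantify tempo: words per minute, compared across two conditions. This is not the study's analysis pipeline. The function names are hypothetical, and the example numbers are invented for illustration only.

```python
def words_per_minute(transcript: str, duration_seconds: float) -> float:
    """Speaking rate as whitespace-separated words per minute."""
    if duration_seconds <= 0:
        raise ValueError("duration_seconds must be positive")
    return len(transcript.split()) * 60.0 / duration_seconds


def percent_change(baseline: float, observed: float) -> float:
    """Relative change of `observed` against `baseline`, in percent."""
    return 100.0 * (observed - baseline) / baseline


# Illustrative values only (not data from the study):
# 150 wpm with a supportive audience, 135 wpm with an unsupportive one.
slowdown = percent_change(baseline=150.0, observed=135.0)
print(f"Change in speaking rate: {slowdown:.1f}%")
```

Counting words is only one option. Syllables per second, or a rate that excludes pauses, would capture tempo differently, and which one the researchers used is not stated here.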

Why VR is a game-changer for studying social stress

Traditional public speaking research often relies on staged audiences or lab-based tasks with limited ecological validity. VR offers a controlled yet lifelike arena where audience behavior—eye contact, posture, micro-gestures, even subtle fidgets—can be scripted and synchronized with precise measurements of the speaker’s body and voice. That combination lets researchers connect how a room “feels” with how a speaker actually performs, moment to moment.

Crucially, the approach marries subjective reports with bio-behavioral signals. It’s one thing for a speaker to say they felt uneasy; it’s another to see that discomfort register across their pacing, vocal energy, and physiological responses. By capturing both, VR helps unpack the complex loop between audience feedback, perceived threat, and communication style.

Implications for training, streaming, and esports

Beyond academia, the findings have clear implications for skill-building in interactive performance:

- Public speaking and corporate training: Simulated audiences can be tuned to challenge specific weaknesses—pacing under pressure, managing anxiety spikes, or maintaining clarity amid perceived hostility.

- Content creators and streamers: Live chat can feel like a volatile crowd. VR rehearsal against “difficult” audiences may help creators preserve tone, tempo, and confidence when feedback turns negative.

- Casters and competitive players: Tournament pressure carries social-evaluative threat. Practicing with adaptive VR crowds could help commentators and captains manage arousal without sacrificing clarity or strategic calls.

What stood out in the signals

The unsupportive audience didn’t just nudge one metric; it shifted the whole system. Participants reported feeling more strained and anxious, their speaking tempo slowed, and their voice acoustics signaled heightened emotional load alongside a push toward dominance. The study also monitored heart rate, breathing, pupil dilation, and EEG, and the key takeaway is that multiple channels (self-report, behavior, and physiology) aligned to paint a consistent picture of social stress reshaping performance.

Where this tech could go next

Because VR lets developers manipulate audience behavior with surgical precision, future systems could become real-time coaches. Imagine a rehearsal tool that monitors your vocal energy, gaze distribution, and breathing patterns, then dynamically shifts audience reactions to push targeted improvements—softening when you spiral, challenging when you plateau, and surfacing instant feedback on pacing and delivery.
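As a rough illustration of that feedback loop, the Python sketch below picks the next audience mood from a snapshot of the speaker's state. Everything in it is an assumption made for illustration: the `SpeakerState` fields, the three moods, and the thresholds are hypothetical and do not come from the study or from any existing product.

```python
from dataclasses import dataclass

# Hypothetical audience moods, ordered from easiest to hardest.
MOODS = ["supportive", "neutral", "unsupportive"]


@dataclass
class SpeakerState:
    words_per_minute: float    # current speaking rate
    baseline_wpm: float        # the speaker's relaxed speaking rate
    breaths_per_minute: float  # current breathing rate


def next_mood(current: str, state: SpeakerState) -> str:
    """Choose the next audience mood using simple, assumed thresholds."""
    i = MOODS.index(current)
    pace_ratio = state.words_per_minute / state.baseline_wpm
    struggling = pace_ratio < 0.8 or state.breaths_per_minute > 22
    coasting = pace_ratio >= 0.95 and state.breaths_per_minute <= 16
    if struggling:
        i = max(i - 1, 0)               # soften the room
    elif coasting:
        i = min(i + 1, len(MOODS) - 1)  # raise the challenge
    return MOODS[i]


# A speaker who has slowed to two thirds of their usual pace gets an easier room.
print(next_mood("neutral", SpeakerState(100.0, 150.0, 18.0)))
```

A real system would need smoothing over time and per-user calibration. Single-sample thresholds like these would make the virtual crowd flip moods too abruptly.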

There’s also room to personalize stress exposure. Some users may benefit from gradually increasing social-evaluative difficulty—moving from receptive to indifferent to confrontational crowds—while tracking progress across bio-behavioral markers. Others might focus on a single dimension, like stabilizing tempo under pressure or curbing overdominant tone when anxiety spikes.
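One way to picture graduated exposure is as a ladder that a user climbs only after several consistently good sessions. The sketch below is a hypothetical illustration: the per-session score (assumed to be a 0 to 1 summary of something like tempo stability), the 0.8 threshold, and the three-session window are all invented for the example.

```python
from typing import Sequence

# Hypothetical difficulty ladder, taken from the progression described above.
LEVELS = ["receptive", "indifferent", "confrontational"]


def next_level(level: int, scores: Sequence[float],
               threshold: float = 0.8, window: int = 3) -> int:
    """Advance one level once the last `window` scores all meet `threshold`."""
    recent = list(scores)[-window:]
    if len(recent) == window and all(s >= threshold for s in recent):
        return min(level + 1, len(LEVELS) - 1)
    return level


# Three solid sessions against a receptive crowd unlock the indifferent one.
level = next_level(0, [0.9, 0.85, 0.8])
print(LEVELS[level])
```

Requiring a full window of good sessions is a deliberately conservative choice. It keeps one lucky run from pushing a user into a harder room before they are ready.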

The bigger picture

This work underscores a simple truth: audience energy matters, and speakers respond with their whole body and voice. VR provides the sandbox to study that dynamic safely, repeatedly, and at scale. For anyone who performs in front of others—on stage, on stream, or in the boardroom—the path to better communication may run through a headset that can simulate the toughest room you’ll ever face, then teach you how to thrive in it.