Three Years On, How Accurate Are AI Chatbots?

Three years after ChatGPT’s public debut on November 30, 2022, AI chatbots built on large language models (LLMs) have gone from curiosity to everyday tools. They draft emails, summarize long reports, debug code, and field increasingly nuanced questions. The leap in capability is real. So is the question users keep asking: Can we trust their answers?

What’s improved since 2022

Modern LLMs handle multi-step prompts more coherently, distill large volumes of information into readable summaries, and assist developers with boilerplate code and refactoring. They’re faster, more steerable, and better at following instructions. Tool integrations and retrieval features, when available, can anchor responses to documents or the web, reducing the chance of a model “making things up.”

What still goes wrong

Despite these gains, familiar weak spots remain: hallucinated facts, misread context, and overconfident answers. Models can cite sources that don’t support their claims, or that don’t exist at all; they can conflate similar entities and omit crucial caveats. These are not merely edge cases. They are systemic risks of probabilistic text generators, which by default predict plausible wording rather than verify truth.

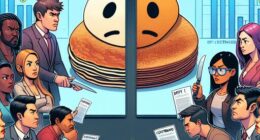

What the latest newsroom audit found

A study by the European Broadcasting Union (EBU) and the BBC, highlighted by Statista’s Tristan Gaudiat, offers a snapshot of current accuracy. Between May and June 2025, a cohort of journalists evaluated answers from four popular free chatbots: ChatGPT, Gemini, Copilot, and Perplexity. They found that 48 percent of responses contained accuracy issues, and 17 percent had significant problems, most often tied to sourcing errors and missing context.

That is progress compared to late 2024. In December 2024, based on a smaller sample of answers, the same four chatbots produced inaccurate responses 72 percent of the time, with 31 percent categorized as major issues. Because the two rounds used different sample sizes, the figures are not perfectly comparable, but the drop suggests that model updates, better safeguards, and prompt refinements are making a difference. Still, “nearly half” inaccurate is a sobering figure, especially for users who might assume a chatbot’s answers are as reliable as a search engine or expert advice.

Why accuracy remains hard

- Probabilistic outputs: LLMs generate text by predicting likely next words, not by checking facts. Plausible wording can mask subtle inaccuracies.

- Training data limits: Models learn from imperfect, sometimes conflicting data and may propagate those inconsistencies.

- Context handling: Long prompts, multi-turn chats, and ambiguous questions can lead to misinterpretation or loss of crucial details.

- Source grounding: Without reliable retrieval or explicit citations, claims may lack verifiable support.

High-stakes domains demand caution

In fields like healthcare, legal services, and education, the cost of an incorrect or incomplete answer can be substantial. Hallucinated citations in a patient care plan, a misapplied statute in legal guidance, or a missing caveat in a historical summary can mislead even vigilant readers. These systems can be valuable assistants, but they’re not replacements for clinicians, attorneys, or educators—and they should not be treated as such.

How to use chatbots responsibly today

- Verify claims: Cross-check important facts with primary sources or trusted references before acting.

- Ask for sources: Request citations and inspect whether they truly support the answer. Be wary of invented or mismatched references.

- Be specific: Clear, scoped prompts help models stay on target and reduce the chance of filling gaps with guesswork.

- Break down tasks: Complex queries often yield better results when split into smaller steps with intermediate checks.

- Use retrieval when available: Features that ground responses in your documents or the web can improve traceability—though they still require scrutiny.

- Keep humans in the loop: Treat the model as a drafting tool or second opinion, not a final arbiter.

The bottom line

Three years in, AI chatbots are undeniably more capable than they were at launch, and the EBU/BBC findings show meaningful accuracy gains from late 2024 to mid-2025. Yet gaps persist: in the most recent newsroom testing, nearly half of responses still showed issues, and 17 percent had significant problems, most often with sourcing or missing context.

The trajectory is positive, but reliability remains a work in progress. As developers push the frontier, users—especially in high-stakes scenarios—should pair these tools with rigorous verification and expert oversight. The promise is real; so are the limits.