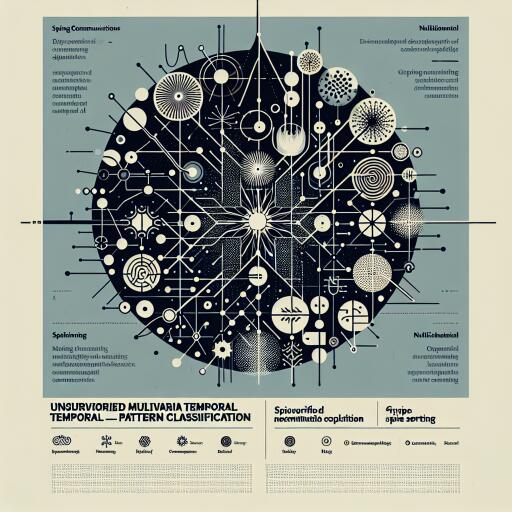

A frugal Spiking Neural Network for unsupervised multivariate temporal pattern classification and multichannel spike sorting – Nature Communications

As neural interfaces scale to thousands of channels, making sense of their continuous data streams within tight power budgets has become a central challenge. Researchers now report a simple, single-layer Spiking Neural Network (SNN) that learns and classifies multivariate temporal patterns entirely without supervision, in an online-compatible and highly frugal fashion. Validated on simulated signals, speech features, and multichannel neural recordings, the approach also performs fully unsupervised multichannel spike sorting on both synthetic and real datasets, pointing toward future on-implant, ultra-low-power neural processing.

Why it matters

High-density electrode arrays can dramatically improve our view of the brain, but they also create a deluge of data. Extracting hidden spatiotemporal patterns, especially across multiple channels, remains difficult with conventional pipelines. For neuroprosthetics and basic neuroscience alike, discovering behaviorally relevant dynamics without labels and without expensive compute would remove a major bottleneck. Spike sorting, a crucial preprocessing step that isolates the activity of single neurons, is especially hard to embed in implants because of power and latency constraints. This work targets that gap with unsupervised, online-capable, hardware-friendly learning at the edge.

What the team built

- A generic, single-layer SNN that learns multivariate temporal patterns from continuous streams without any labels.

- A neuron model based on a variant of Low-Threshold Spiking (LTS) dynamics that naturally adapts to the duration of incoming patterns, with no need for extra synapses or complex delay lines.

- Local, biologically inspired learning via spike-timing–dependent plasticity (STDP) and intrinsic plasticity (IP), enabling self-configuration with minimal parameters.
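For readers unfamiliar with LTS dynamics, the sketch below simulates a generic Izhikevich neuron using the low-threshold spiking parameter set from Izhikevich's 2003 model (a = 0.02, b = 0.25, c = -65, d = 2). It is not the authors' LTS variant, whose equations the article does not give; the function name `simulate_lts`, the constant-current input, and the Euler step size are assumptions chosen for illustration.

```python
# Generic Izhikevich neuron with the published "low-threshold spiking" (LTS)
# parameter set. Illustrative only: NOT the paper's LTS variant.
LTS_PARAMS = {"a": 0.02, "b": 0.25, "c": -65.0, "d": 2.0}


def simulate_lts(current, steps=4000, dt=0.25, params=LTS_PARAMS):
    """Euler-integrate one neuron under a constant input current.

    `steps * dt` is the simulated duration in ms (1000 ms by default).
    Returns the list of spike times in ms.
    """
    a, b, c, d = params["a"], params["b"], params["c"], params["d"]
    v = c          # membrane potential (mV), starts at the reset value
    u = b * v      # recovery variable, starts on its nullcline
    spikes = []
    for step in range(steps):
        # Izhikevich membrane and recovery equations
        dv = 0.04 * v * v + 5.0 * v + 140.0 - u + current
        du = a * (b * v - u)
        v += dt * dv
        u += dt * du
        if v >= 30.0:          # spike peak reached: record, then reset
            spikes.append(step * dt)
            v = c
            u += d             # each spike strengthens the recovery variable
    return spikes


if __name__ == "__main__":
    for current in (0.0, 5.0, 10.0):
        print(f"I = {current:4.1f} -> {len(simulate_lts(current))} spikes")
```

Without input the neuron settles at rest and stays silent, while a sustained current drives repeated firing. The recovery variable `u` increments by `d` at each spike and feeds back negatively on `v`, which is the mechanism behind spike-frequency adaptation in this model.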

How it differs from deep learning

Deep Neural Networks excel with labeled data, global loss minimization, and heavy compute—traits mismatched to implantable, always-on systems where labels are scarce and power is limited. Even self-supervised methods typically need supervised readouts downstream. DNNs also simulate time rather than natively computing with it. By contrast, SNNs operate on sparse spikes, learn locally at each synapse, and require fewer parameters. That makes them inherently more energy-efficient and better aligned with neuromorphic hardware—FPGAs today, emerging resistive-memory devices tomorrow. Crucially, this SNN runs fully unsupervised and is designed for online operation.
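The energy argument rests on sparsity: a spiking layer only needs to touch the synapses of inputs that actually fired. The hypothetical helpers below compare a dense, DNN-style weighted sum with an event-driven one for binary spike inputs, counting weight reads as a rough proxy for memory traffic. This is a generic illustration under those assumptions, not the paper's implementation.

```python
def dense_drive(weights, inputs):
    """Dense weighted sum: every weight is read at every time step."""
    drive, reads = [], 0
    for row in weights:
        total = 0.0
        for w, x in zip(row, inputs):
            total += w * x
            reads += 1
        drive.append(total)
    return drive, reads


def event_driven_drive(weights, active_inputs):
    """Event-driven weighted sum: only weights of inputs that spiked are read."""
    drive, reads = [], 0
    for row in weights:
        total = 0.0
        for i in active_inputs:
            total += row[i]    # binary spike: weight times 1
            reads += 1
        drive.append(total)
    return drive, reads


if __name__ == "__main__":
    n_neurons, n_inputs = 4, 100
    # Arbitrary deterministic toy weights
    weights = [[((i * 7 + j) % 5) / 10 for i in range(n_inputs)]
               for j in range(n_neurons)]
    active = [3, 41, 77]                        # inputs that spiked this step
    inputs = [1 if i in active else 0 for i in range(n_inputs)]
    print(dense_drive(weights, inputs))
    print(event_driven_drive(weights, active))
```

With 3 of 100 inputs active, the event-driven version reads 12 weights instead of 400 while producing the same drive; the saving grows as activity gets sparser.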

Overcoming limitations of past SNN approaches

Earlier SNNs showed promise in static vision and supervised temporal tasks, but general unsupervised multivariate pattern discovery remained elusive. Common bottlenecks included:

- Dependence on multi-layer, fully connected structures with large parameter counts.

- Reliance on spike order strategies that fail when patterns are nested or differ only in their endings.

- Use of fixed time windows that prevent true streaming operation.

- Learning synaptic delays via supervised methods.

The new design sidesteps these constraints. The LTS neuron variant provides adaptive temporal sensitivity within a single layer, avoiding both heavy architectures and supervised delay learning. The network also preserves online processing, since it works on the continuous stream without pre-segmenting it into fixed windows.
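Streaming operation means state must persist across arbitrary chunk boundaries, so the output cannot depend on how the stream is cut. The sketch below illustrates this with a per-channel delta (level-crossing) encoder, a common way to turn analog samples into spikes. The article does not say how the network's input spikes are produced, so the encoder, the class name `DeltaEncoder`, and the threshold value are assumptions.

```python
class DeltaEncoder:
    """Hypothetical per-channel delta encoder that keeps state between calls."""

    def __init__(self, n_channels, threshold):
        if threshold <= 0:
            raise ValueError("threshold must be positive")
        self.n_channels = n_channels
        self.threshold = threshold
        self.reference = None  # last encoded level per channel
        self.t = 0             # global sample index, survives chunk boundaries

    def process(self, chunk):
        """Encode a chunk of samples (each a list with one value per channel).

        Returns (sample_index, channel, polarity) events, polarity +1 or -1.
        """
        events = []
        for sample in chunk:
            if self.reference is None:
                self.reference = list(sample)   # first sample sets the baseline
            for ch in range(self.n_channels):
                diff = sample[ch] - self.reference[ch]
                # One event per threshold step crossed since the last event
                while abs(diff) >= self.threshold:
                    polarity = 1 if diff > 0 else -1
                    events.append((self.t, ch, polarity))
                    self.reference[ch] += polarity * self.threshold
                    diff = sample[ch] - self.reference[ch]
            self.t += 1
        return events


if __name__ == "__main__":
    signal = [[0.0, 0.0], [1.2, -0.3], [1.3, -1.1], [0.2, -1.0]]
    whole = DeltaEncoder(2, 0.5).process(signal)
    enc = DeltaEncoder(2, 0.5)
    chunked = enc.process(signal[:2]) + enc.process(signal[2:])
    print(whole == chunked, whole)
```

Because the reference levels and sample counter live on the encoder object, feeding the stream in one piece or in chunks yields identical events, which is the property a fixed-window pipeline gives up.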

Validation across modalities

The researchers tested the network in escalating complexity:

- Simulated multivariate signals to probe controlled temporal structure.

- Mel cepstral representations of speech sounds to assess naturalistic, time-varying audio patterns.

- Multichannel multiunit neural recordings to evaluate performance on real neural dynamics.

Across these settings, the SNN reliably discovered and categorized overlapping temporal motifs using only a small number of neurons. Most notably, the model performed multichannel spike sorting in a fully unsupervised, online-compatible mode on both synthetic and real datasets—eliminating the traditional detect–feature–cluster pipeline that often adds latency and computational load.

Spike sorting, simplified

Conventional spike sorting typically involves energy-hungry steps: detection, feature extraction, and clustering. Those stages are hard to miniaturize for implantable devices, especially as channel counts climb. Here, the SNN learns spike waveform patterns directly from the data stream and classifies action potentials on the fly—no labels, no post hoc clustering, and no handcrafted features. The result is a lean, streaming-friendly workflow that aligns with real-time operation and low-power budgets.
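A single layer can classify as it learns when its neurons compete. The sketch below uses winner-take-all competitive (instar) learning, a rate-based simplification that stands in for the spiking STDP dynamics described here: each incoming binary pattern (for example, which input lines spiked during a waveform) is assigned to the neuron with the largest weighted input, and only that neuron moves its weights toward the pattern. The function names, learning rate, and toy patterns are hypothetical, and this is not the authors' algorithm.

```python
def winner(weights, pattern):
    """Index of the neuron with the largest weighted input (winner-take-all).

    `pattern` is a binary list: 1 where an input line carried a spike.
    Ties go to the lowest index, because max() returns the first maximum.
    """
    drives = [sum(w * x for w, x in zip(row, pattern)) for row in weights]
    return max(range(len(drives)), key=lambda j: drives[j])


def learn_online(weights, stream, rate=0.5):
    """Competitive learning: only the winner moves its weights toward the input.

    `weights` is updated in place. Returns the label assigned to each pattern
    as it arrives, so classification and learning share one pass.
    """
    labels = []
    for pattern in stream:
        j = winner(weights, pattern)
        weights[j] = [w + rate * (x - w) for w, x in zip(weights[j], pattern)]
        labels.append(j)
    return labels


if __name__ == "__main__":
    A, B = [1, 1, 0, 0], [0, 0, 1, 1]
    # Two nearly identical neurons with a tiny deterministic asymmetry
    weights = [[0.55, 0.5, 0.5, 0.5], [0.5, 0.5, 0.5, 0.55]]
    print(learn_online(weights, [A, B, A, B, B, A]))  # → [0, 1, 0, 1, 1, 0]
```

The two neurons start almost identical but each specializes on one pattern after its first win, and every pattern receives a label at the moment it arrives, with no separate clustering pass.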

Frugality by design

Several design choices make this approach hardware-ready:

- Single-layer topology with few neurons and parameters.

- Sparse spike-based computation that minimizes memory traffic.

- Local learning rules (STDP/IP) eliminating global optimization and large memory footprints.

- Compatibility with neuromorphic and ultra-low-power platforms, such as low-power FPGAs and emerging resistive memory devices for plastic synapses.
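The two local rules named in this list can each be written in a few lines. The sketch below uses the standard pair-based form of STDP and, as one common form of intrinsic plasticity, homeostatic threshold adaptation. The amplitudes, time constants, target rate, and function names are illustrative assumptions, since the article does not give the paper's exact rules or values.

```python
import math

# Illustrative constants only; not the paper's values.
A_PLUS, A_MINUS = 0.01, 0.012       # potentiation / depression amplitudes
TAU_PLUS, TAU_MINUS = 20.0, 20.0    # STDP time constants (ms)


def stdp_dw(delta_t):
    """Pair-based STDP weight change for delta_t = t_post - t_pre (ms).

    Pre-before-post (delta_t > 0) potentiates; post-before-pre depresses.
    Only the two spike times are needed, so the rule is local to the synapse.
    """
    if delta_t > 0:
        return A_PLUS * math.exp(-delta_t / TAU_PLUS)
    if delta_t < 0:
        return -A_MINUS * math.exp(delta_t / TAU_MINUS)
    return 0.0


def apply_stdp(weight, delta_t, w_min=0.0, w_max=1.0):
    """Apply one STDP update and clip the weight to [w_min, w_max]."""
    return min(w_max, max(w_min, weight + stdp_dw(delta_t)))


def update_threshold(threshold, fired, target_rate=0.05, eta=0.1):
    """Intrinsic plasticity as homeostatic threshold adaptation.

    The threshold rises after each spike and falls slightly on silent steps,
    steering the neuron's firing probability toward `target_rate` per step.
    """
    return threshold + eta * ((1.0 if fired else 0.0) - target_rate)


if __name__ == "__main__":
    print(apply_stdp(0.5, 5.0), apply_stdp(0.5, -5.0))
    print(update_threshold(1.0, True), update_threshold(1.0, False))
```

Each update uses only quantities available at the synapse or neuron itself (two spike times, or the neuron's own output), which is why no global loss or backpropagated error is needed.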

Previous work by the team demonstrated online classification on FPGAs for single-channel signals; this study scales the concept to multichannel, multivariate temporal processing without sacrificing simplicity.

What’s next

By demonstrating robust, unsupervised recognition of high-dimensional temporal patterns with a frugal SNN, the authors open a path toward intelligent, autonomous neural interfaces. Future active implants could embed this kind of model to continuously discover relevant neural features, adapt to changing signals, and power next-generation neuroprosthetics—all without cloud connectivity or heavyweight silicon.

Beyond neural data, the same principles could extend to other sensor-rich domains where patterns unfold across channels and time—think biosignals, audio, tactile arrays, or industrial monitoring—whenever labels are scarce and power is precious.

Bottom line

This work showcases a practical middle ground between capability and efficiency: a single-layer, unsupervised SNN that learns from the stream itself, handles overlapping multivariate patterns, and performs multichannel spike sorting—all with a footprint suited to ultra-low-power hardware. It’s a compelling step toward truly embedded, real-time pattern recognition in the brain and beyond.