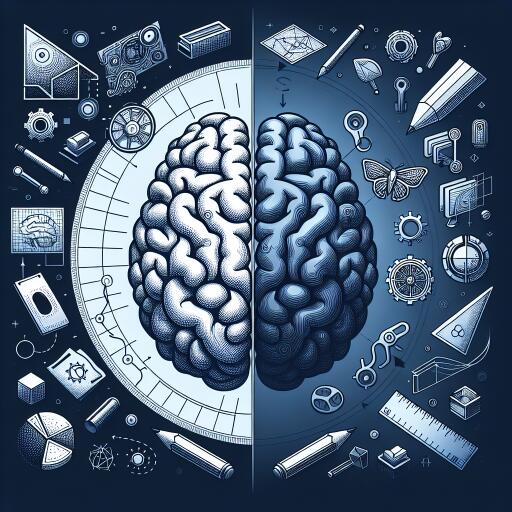

Inverse Graphics: How Your Brain Turns 2D Into 3D

In a notable step for both neuroscience and artificial intelligence, researchers have uncovered how primate brains convert flat, 2D visual inputs into rich 3D representations. The process, termed “inverse graphics,” runs traditional computer graphics in reverse: where graphics software renders a 2D image from a 3D model, the brain recovers a 3D model from a 2D image. The discovery could inform future machine vision systems and treatments for visual disorders.

Using a neural network called the Body Inference Network (BIN), scientists mapped this transformation and found that the network's processing closely tracks activity in specific primate brain regions specialized for recognizing body shapes. The result not only advances our understanding of human depth perception but also offers a new perspective on building AI with capabilities closer to primate vision.

At the heart of this Yale-led research is a computational model that reveals the algorithm the primate brain uses to construct 3D representations from 2D imagery. As Ilker Yildirim, the study's senior author and an assistant professor of psychology at Yale, puts it: “This gives us evidence that the goal of vision is to establish a 3D understanding of an object. When you open your eyes, you see 3D scenes — the brain’s visual system is able to construct a 3D understanding from a stripped-down 2D view.”

The Process of Inverse Graphics

In simple terms, inverse graphics means the brain works like computer graphics, but in reverse. Starting from a 2D view, visual processing passes through an intermediate “2.5D” stage (a viewer-centered representation of the visible surfaces) before arriving at a robust, view-tolerant 3D model. The study, published in the Proceedings of the National Academy of Sciences, highlights how the human brain automatically transforms flat images, whether on a page or a screen, into rich 3D percepts.

Computer graphics, by contrast, traditionally starts with a complete 3D scene and renders it into 2D images for display. “This is a significant advance in understanding computational vision,” Yildirim notes. “Your brain automatically does this, and it’s hard work, computationally. It remains a challenge to get machine vision systems to come close to doing this for the everyday scenes we can encounter.”
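To make the forward/inverse distinction concrete, here is a minimal Python sketch under heavily simplified assumptions: a hypothetical toy "body" made of four 3D keypoints, an orthographic camera, and a single unknown attribute (orientation about the vertical axis). `render` plays the role of computer graphics (3D to 2D), and `infer_angle` inverts it by analysis-by-synthesis, rendering every candidate orientation and keeping the best match. The names `BODY`, `render`, `image_error`, and `infer_angle` are illustrative, and this brute-force search is not the study's method.

```python
import math

# Hypothetical toy "body": four 3D keypoints (x, y, z). Not data from the study.
BODY = [(0.0, 1.0, 0.2), (0.5, 0.0, -0.3), (-0.4, -0.8, 0.6), (0.3, 0.5, 0.9)]


def render(points, angle):
    """Forward graphics: rotate the 3D points about the vertical (y) axis
    by `angle` radians, then project orthographically onto the image
    plane by dropping the depth coordinate."""
    c, s = math.cos(angle), math.sin(angle)
    return [(c * x + s * z, y) for x, y, z in points]


def image_error(a, b):
    """Sum of squared distances between corresponding 2D keypoints."""
    return sum((ax - bx) ** 2 + (ay - by) ** 2
               for (ax, ay), (bx, by) in zip(a, b))


def infer_angle(image, points, steps=360):
    """Inverse graphics by analysis-by-synthesis: render each candidate
    orientation on a regular grid and keep the best-matching one."""
    candidates = [2 * math.pi * k / steps for k in range(steps)]
    return min(candidates, key=lambda a: image_error(render(points, a), image))


observed = render(BODY, 0.7)  # the flat 2D view the observer receives
estimate = infer_angle(observed, BODY)
print(round(estimate, 3))  # prints 0.698 (nearest 1-degree grid step to 0.7)
```

Search like this is easy to reason about but scales poorly: the number of candidates grows exponentially as attributes such as shape and posture are added, which is one motivation for learning a fast feed-forward inverse instead.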

Key Discoveries and Future Implications

The research focused on the inferotemporal cortex, a region of the primate temporal lobe that is central to visual processing. The researchers trained the Body Inference Network in two steps: first to represent bodies in 2D images in terms of attributes such as shape, posture, and orientation, and then to reverse this process, constructing 3D models from 2D images labeled with the corresponding 3D data.
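The training recipe described above, rendering 2D images from known 3D attributes and then learning to map the images back to those attributes, can be sketched in miniature. This is a hedged toy, not BIN: BIN is a neural network trained on far richer images and several body attributes, whereas this Python sketch assumes a hypothetical four-keypoint "body", an orthographic camera, a single attribute (orientation), and a linear model trained by gradient descent. Names such as `BODY`, `render`, `features`, and `infer_angle` are illustrative.

```python
import math

# Hypothetical toy "body" keypoints (x, y, z); illustrative, not study data.
BODY = [(0.0, 1.0, 0.2), (0.5, 0.0, -0.3), (-0.4, -0.8, 0.6), (0.3, 0.5, 0.9)]


def render(points, angle):
    """Forward graphics: rotate about the vertical axis, project orthographically."""
    c, s = math.cos(angle), math.sin(angle)
    return [(c * x + s * z, y) for x, y, z in points]


def features(image):
    """Keep the horizontal keypoint coordinates; the vertical ones do not
    change under this rotation, so they carry no orientation information."""
    return [u for u, _ in image]


# 1. Labeled training set: 2D views rendered from known 3D attributes.
#    Evenly spaced orientations keep the toy deterministic.
N = 72
train = []
for k in range(N):
    angle = 2 * math.pi * k / N
    target = (math.cos(angle), math.sin(angle))  # angle encoded on the unit circle
    train.append((features(render(BODY, angle)), target))

# 2. Train a linear "inference network" W (2 x 4) by full-batch gradient
#    descent on mean squared error, mapping 2D features back to the label.
dim = len(BODY)
W = [[0.0] * dim for _ in range(2)]
lr = 1.0
for _ in range(300):
    grad = [[0.0] * dim for _ in range(2)]
    for x, t in train:
        for r in range(2):
            err = sum(W[r][j] * x[j] for j in range(dim)) - t[r]
            for j in range(dim):
                grad[r][j] += err * x[j] / N
    for r in range(2):
        for j in range(dim):
            W[r][j] -= lr * grad[r][j]


def infer_angle(image):
    """Amortized inverse graphics: a single feed-forward pass, no search."""
    x = features(image)
    c, s = (sum(W[r][j] * x[j] for j in range(dim)) for r in range(2))
    return math.atan2(s, c)


print(round(infer_angle(render(BODY, 0.7)), 3))  # unseen view; prints 0.7
```

Encoding the angle as (cos θ, sin θ) avoids the wraparound at 2π, and in this toy the horizontal image coordinates are exactly linear in that pair, which is why a linear model suffices; real images of bodies would require a nonlinear network. Once trained, inference costs one pass rather than a search over candidate 3D scenes.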

When the researchers compared the model with activity in the macaque brain, BIN’s processing stages aligned with the regions involved in body shape processing. The findings suggest that this aspect of brain function can be mapped in detail and potentially replicated in artificial systems. “Our model explained the visual processing in the brain much more closely than other AI models typically do,” Yildirim emphasized. “We are most interested in the neuroscience and cognitive science aspects of this, but also with the hope that this can help inspire new machine vision systems and facilitate possible medical interventions in the future.”

Collaborating on the study were first author Hakan Yilmaz and Aalap Shah, both Ph.D. candidates at Yale, along with researchers at Princeton University and KU Leuven in Belgium.

Potential Applications and Broader Impact

By examining stimulus-driven, multiarea processing in the inferotemporal (IT) cortex, the research suggests that the computations of primate vision center on inferring 3D objects from sensory inputs. This offers an alternative way to frame the computational-level objectives of vision in neuroscience, one that could reshape how we approach machine vision systems and therapies for visual impairments.

By modeling the brain’s processing as inverse graphics, the study underscores both the complexity and the efficiency of human vision and points to ways of replicating these abilities in machines. In particular, the correspondence between the inference network’s stages and the forward processing stages of the IT network opens a new frontier for machine learning models that approach primate visual abilities.

As the field evolves, further exploration of the neural algorithms underlying our visual systems could lead to breakthroughs in both scientific understanding and technological applications.

By revealing how the brain performs inverse graphics, this research enriches our understanding of visual perception and sets the stage for innovations that bridge biological and artificial intelligence.